The FlowX.AI Blog

Data Access

Orchestration

Integration

AI Agents

Dec 16, 2025

Your AI Strategy Is Only as Strong as Your Integration Layer

The AI Demo Problem

Most leadership teams have watched the same demo by now. An agentic AI prototype looks like the future, until someone asks the least glamorous question in the room: “Where does it get the data?” That’s when the prototype reveals what it really is: a smart interface on top of a curated dataset, not a capability wired into the systems that actually run the business.

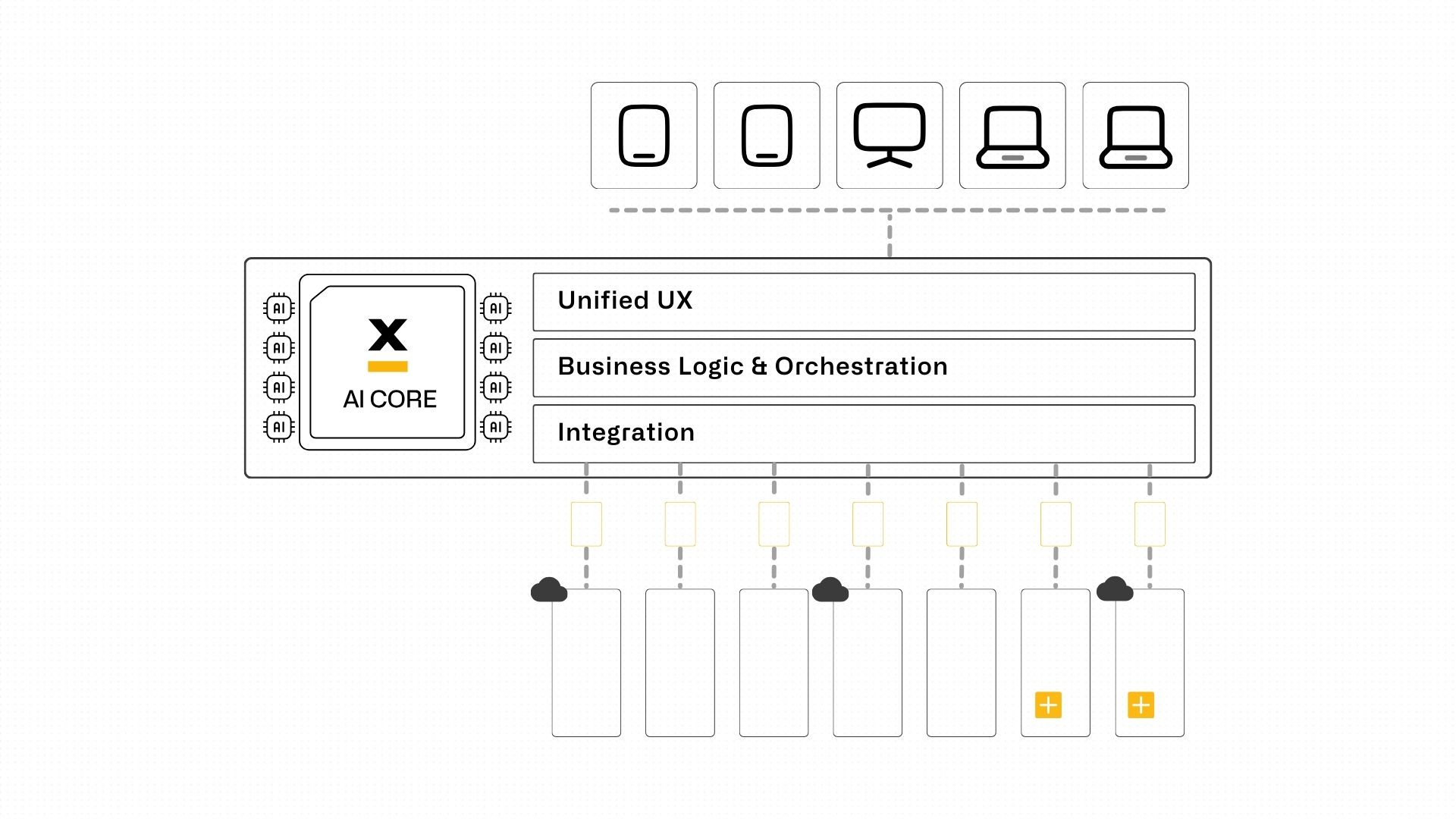

This is the uncomfortable truth about enterprise AI: model capability is no longer the bottleneck. Access is. If your AI can’t reliably reach the right data at the right time, through controlled, production-grade pathways, your strategy stays stuck at the “impressive pilot” stage. And in regulated environments, “access” doesn’t mean a one-off API call. It means a governed integration layer that can scale across systems, teams, and use cases without turning every new idea into a quarter-long integration saga.

Why Integration Is the Real Constraint

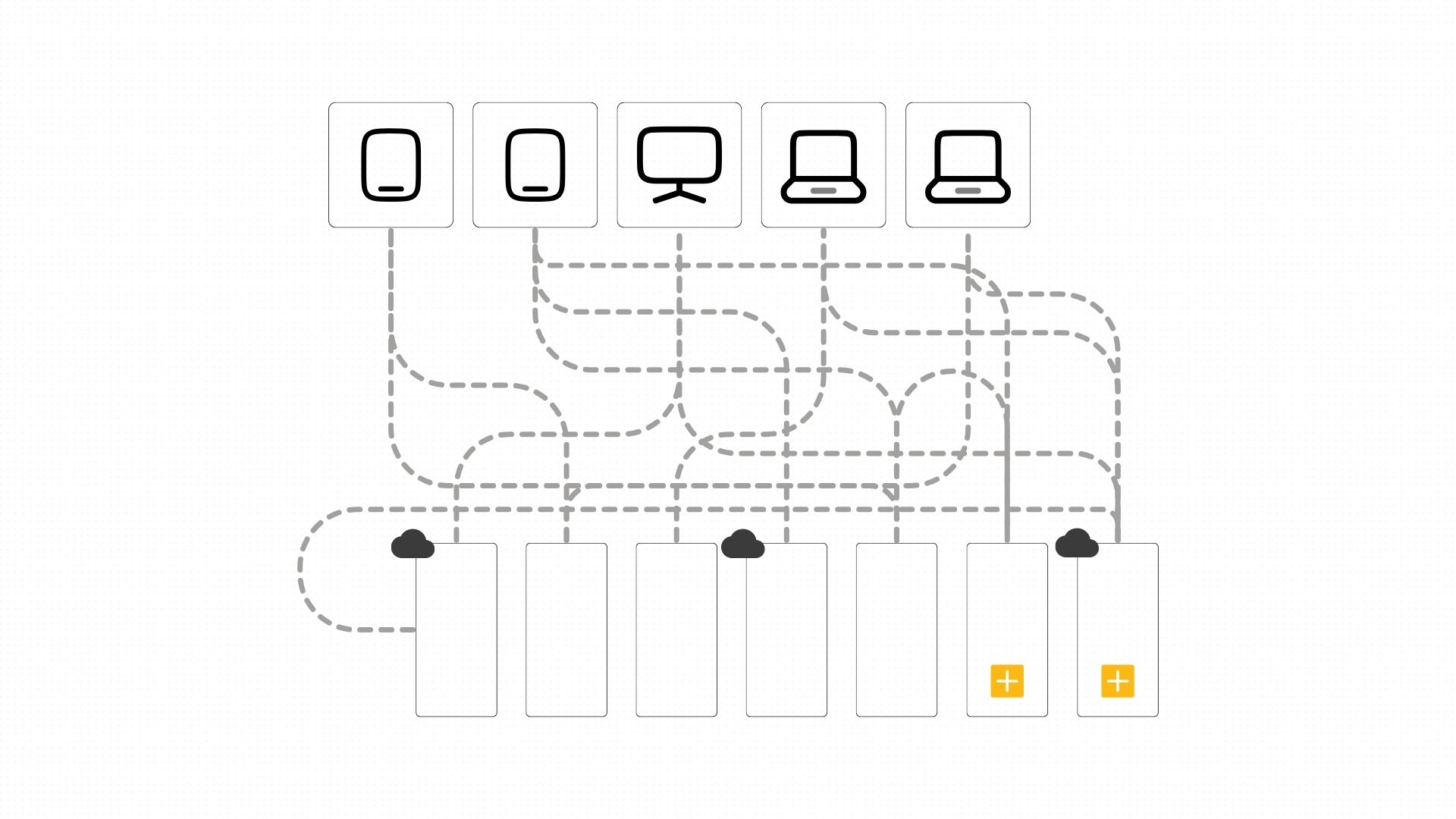

Integration is often treated like plumbing: important, but not strategic. In reality, it’s closer to a nervous system. It determines what your organization can sense, how quickly it can respond, and whether it can act consistently across channels. If you can’t connect to accounts, transactions, limits, policy rules, KYC services, payments, documents, and audit trails at production speed, your AI roadmap turns into theatre: expensive, polished, and non-deployable.

AI raises the bar because it doesn’t just inform decisions; increasingly, it triggers actions. That only works when the underlying access patterns are stable, secure, observable, and reusable. If the enterprise is stitched together with bespoke point-to-point connectors, every “simple” AI use case adds another layer of integration debt that drags the initiative down.
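A back-of-the-envelope illustration of why point-to-point connectors turn into debt, assuming the worst case in which every system ends up talking directly to every other one: n systems then need n(n-1)/2 bespoke connectors, while routing them through one shared integration layer needs roughly one adapter per system. This is a simplified counting sketch, not a measurement of any real environment.

```python
def point_to_point_links(n: int) -> int:
    """Worst case: every one of n systems has a bespoke connector to every other."""
    return n * (n - 1) // 2


def hub_links(n: int) -> int:
    """Each system connects once to a shared integration layer."""
    return n


for n in (4, 8, 16):
    print(f"{n:>2} systems: {point_to_point_links(n):>3} point-to-point vs {hub_links(n):>2} via a shared layer")
```

At 16 systems the worst case is 120 connectors versus 16 adapters, and every one of those connectors needs its own security review, mapping, and release coordination.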

The Integration Tax

Most enterprises don’t struggle because they lack engineers. They struggle because the work is trapped between systems. Each integration pulls in multiple squads, security reviews, release calendars, and brittle mappings. Even when the business case is obvious, the delivery reality is slow and political, because every dependency has its own priorities.

Low-code and no-code platforms promised to help by “letting the business build.” In practice, they often added a new layer that still needed deep integration and governance, so complexity increased rather than decreased. The result is a familiar operating model: teams work around IT, processes accumulate manual steps, and innovation quietly slows because shipping anything meaningful becomes a coordination exercise.

Integration-First, Not Integration-Heavy

When people hear “integration layer,” they often picture a multi-year, enterprise-wide initiative with a steering committee and a graveyard of half-finished connectors. That’s not what this is. In practice, most banks and insurers already have plenty of integrations. The problem is that they’re often built as one-off projects: use-case-specific, fragile, and expensive to change. As a result, every new AI idea starts by negotiating with the backlog.

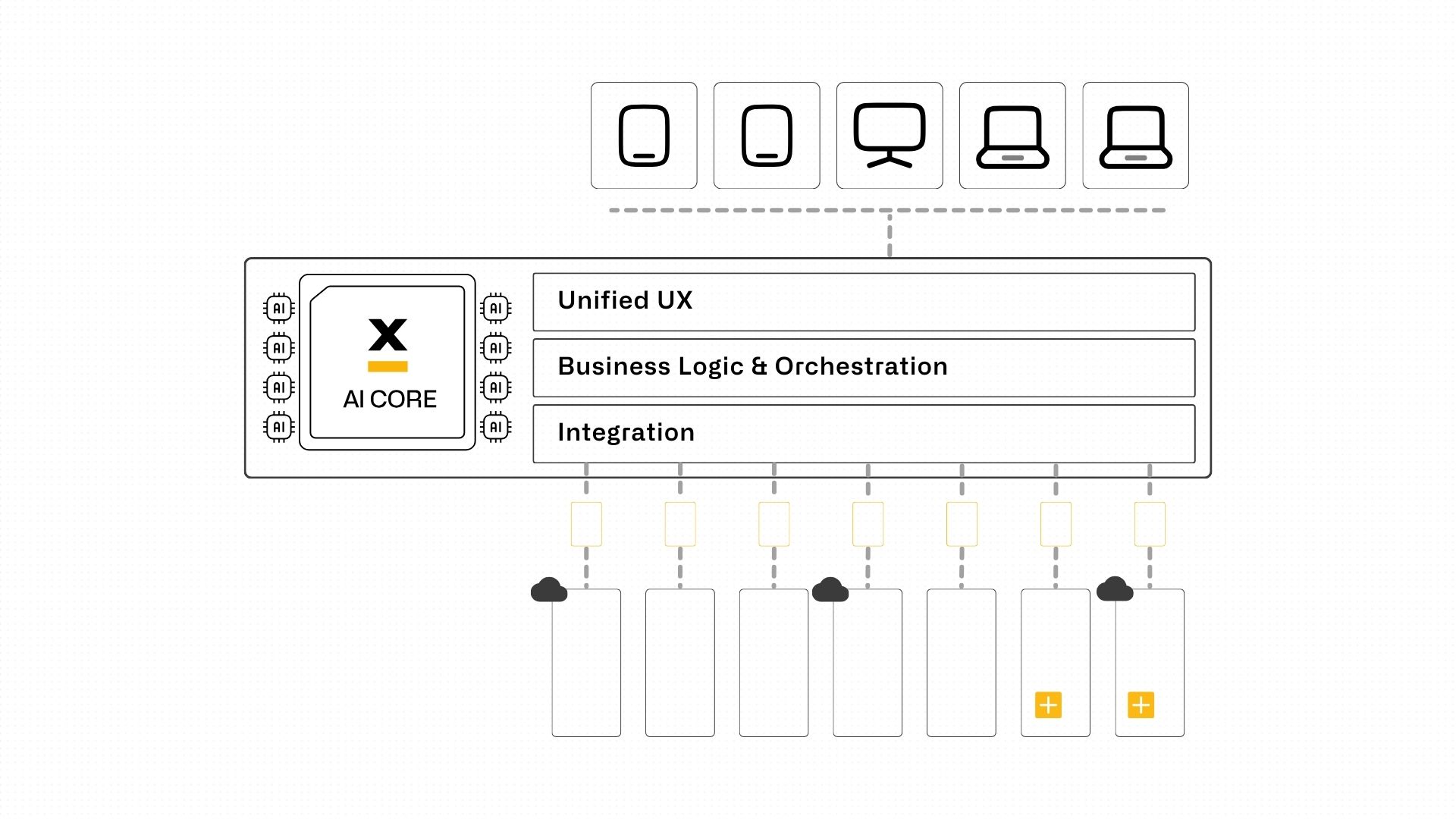

A better pattern is simpler: treat integration as a thin, reusable slice that grows with demand. You don’t “integrate everything” before you do AI. You integrate the minimum set of capabilities needed to make one production use case work end-to-end, then you reuse that work as the foundation for the next one. Integration stops being a gate you must pass and becomes an asset that compounds.
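One way to picture a “thin, reusable slice” in code: a minimal sketch, assuming a hypothetical in-process `CapabilityRegistry` where each integration capability is registered once under a stable name, and every later use case calls it by that name instead of building its own connector. The capability name and the stubbed lambda are illustrative placeholders, not a FlowX.AI API.

```python
from typing import Any, Callable, Dict


class CapabilityRegistry:
    """Hypothetical registry: integrate a capability once, reuse it everywhere."""

    def __init__(self) -> None:
        self._capabilities: Dict[str, Callable[..., Any]] = {}
        self.usage: Dict[str, int] = {}  # how often each capability is reused

    def register(self, name: str, fn: Callable[..., Any]) -> None:
        if name in self._capabilities:
            raise ValueError(f"capability {name!r} already registered")
        self._capabilities[name] = fn
        self.usage[name] = 0

    def call(self, name: str, *args: Any, **kwargs: Any) -> Any:
        if name not in self._capabilities:
            # Not integrated yet: add it only when a use case actually needs it.
            raise KeyError(f"capability {name!r} not integrated yet")
        self.usage[name] += 1
        return self._capabilities[name](*args, **kwargs)


registry = CapabilityRegistry()
# First production use case: integrate only what it needs (stubbed here).
registry.register("get_customer_profile", lambda cid: {"id": cid, "segment": "retail"})

# Two different use cases reuse the same capability instead of new connectors.
servicing_view = registry.call("get_customer_profile", "c-42")
rm_prep_pack = registry.call("get_customer_profile", "c-42")
print(registry.usage)  # {'get_customer_profile': 2}
```

The usage counter is a crude but useful signal: capabilities with many callers are the ones compounding in value, and a `KeyError` marks the point where the next integration is actually justified.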

This is also why the strongest AI programs don’t start with “autonomous agents” on day one. They start with connected agents: agents that can reliably fetch the right data from the right systems, show their work, and operate within clear constraints. Once that is stable, autonomy becomes a dial you turn gradually, not a cliff you jump off.
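Treating autonomy as a dial can be made concrete with a small policy table. The sketch below is a hypothetical illustration, not a prescribed model: the three `Autonomy` levels, the per-action ceilings, and the action names are assumptions. The effective level for any action is the lower of the global dial and that action’s ceiling, so turning the dial up never lets a high-impact action skip its approval step, and unknown actions fall back to draft-only.

```python
from enum import IntEnum


class Autonomy(IntEnum):
    """Hypothetical autonomy levels, from most to least human involvement."""
    SUGGEST = 0            # agent drafts, a human executes
    ACT_WITH_APPROVAL = 1  # agent executes only after explicit approval
    ACT_AND_NOTIFY = 2     # agent executes and informs a human afterwards


# Hypothetical per-action ceilings: the most autonomy each action may ever get.
ACTION_CEILING = {
    "draft_reply": Autonomy.ACT_AND_NOTIFY,
    "issue_refund": Autonomy.ACT_WITH_APPROVAL,
}


def next_step(action: str, dial: Autonomy, approved: bool = False) -> str:
    """Return what the agent should do, given the global dial and the action's ceiling."""
    # Unknown actions default to the most conservative level.
    level = min(dial, ACTION_CEILING.get(action, Autonomy.SUGGEST))
    if level == Autonomy.SUGGEST:
        return "draft_for_human"
    if level == Autonomy.ACT_WITH_APPROVAL:
        return "execute" if approved else "await_approval"
    return "execute_and_notify"
```

For example, with the dial at `ACT_AND_NOTIFY`, `next_step("draft_reply", ...)` executes and notifies, while `next_step("issue_refund", ...)` still waits for approval.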

The Use Case That Proves It

A good first production use case isn’t the grand vision of “AI runs the bank.” It’s the kind of work that quietly burns time every day: case preparation, exception triage, document chasing, validation, and handoffs. These are high-volume, rules-heavy processes where teams spend more effort moving information around than making judgement calls.

Take a practical example: Customer 360 for front-line servicing, but scoped like a grown-up. Not “unify the enterprise customer record,” just this: when a customer calls, assemble the context a human needs in under 10 seconds. At first that might require only a small set of systems: customer profile, recent transactions, active products, and open cases. That’s enough to cut handle time, reduce rework, and stop the ping-pong between teams. The point is not perfection; it is momentum.
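A minimal sketch of the “assemble context in under 10 seconds” step, assuming each of the four systems is reachable through an async call. The `fetch_*` functions are hypothetical stubs (one deliberately slow) standing in for real connectors. All sources are queried concurrently with `asyncio.gather`, each bounded by `asyncio.wait_for`, so the total wait stays close to the budget; a source that misses the budget is reported under `missing` instead of blocking the whole view.

```python
import asyncio
from typing import Any, Awaitable, Callable, Dict, Tuple


# Hypothetical stubs standing in for the real systems of record.
async def fetch_profile(cid: str) -> Dict[str, Any]:
    await asyncio.sleep(0.01)
    return {"id": cid, "segment": "retail"}


async def fetch_transactions(cid: str) -> list:
    await asyncio.sleep(0.01)
    return [{"amount": -42.0, "merchant": "grocer"}]


async def fetch_products(cid: str) -> list:
    await asyncio.sleep(0.01)
    return ["current_account", "credit_card"]


async def fetch_open_cases(cid: str) -> list:
    await asyncio.sleep(2)  # simulates a slow downstream system
    return []


SOURCES: Dict[str, Callable[[str], Awaitable[Any]]] = {
    "profile": fetch_profile,
    "transactions": fetch_transactions,
    "products": fetch_products,
    "open_cases": fetch_open_cases,
}


async def get_customer_context(cid: str, budget_s: float = 10.0) -> Dict[str, Any]:
    """Query every source concurrently; sources slower than the budget are listed as missing."""

    async def guarded(name: str, fetch: Callable[[str], Awaitable[Any]]) -> Tuple[str, bool, Any]:
        try:
            value = await asyncio.wait_for(fetch(cid), timeout=budget_s)
            return name, True, value
        except asyncio.TimeoutError:
            return name, False, None

    results = await asyncio.gather(*(guarded(n, f) for n, f in SOURCES.items()))
    context: Dict[str, Any] = {name: value for name, ok, value in results if ok}
    context["missing"] = [name for name, ok, _ in results if not ok]
    return context


ctx = asyncio.run(get_customer_context("c-42", budget_s=0.5))
print(ctx["missing"])  # ['open_cases']
```

The design trade-off is deliberate: a per-source timeout keeps one slow system from holding the whole view hostage, at the cost of sometimes showing a partial picture, which is why the gaps are returned explicitly rather than silently dropped.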

Once that slice is reliable, it becomes reusable. The same “get customer context” capability powers a relationship manager prep pack, a proactive retention workflow, or a dispute investigation assistant. This is where the compounding starts: you’re not building more integrations. You’re reusing a few good ones more intelligently.

Make Governance Feel Lightweight

The biggest fear executives and risk teams have isn’t “AI might be wrong.” It’s “AI might do something we can’t explain, control, or audit.” So the path to production is to make the system operationally trustworthy from day one.

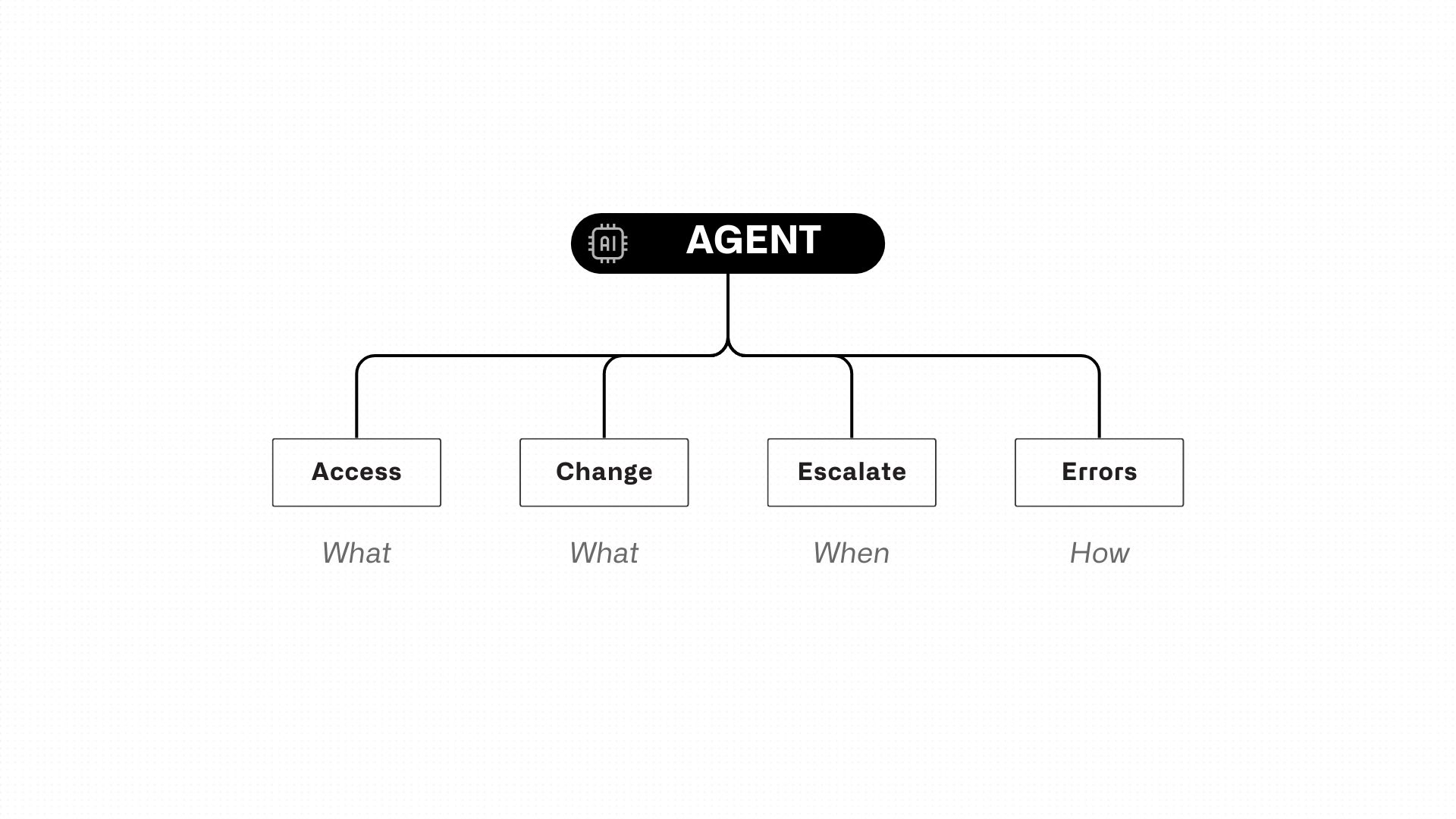

That means your agents should operate inside visible workflows with explicit rules: what they can access, what they can change, when they must escalate, and how failures are handled. The goal is to avoid heroics. No mysterious automation that works until it doesn’t. Instead, you want predictable behavior: if a dependency times out, the agent retries; if data is missing, it requests it; if risk is high, it escalates; if something looks off, it pauses.
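The four rules in that last sentence translate almost directly into an explicit decision function. This is a hedged sketch, not a FlowX.AI feature: the `StepOutcome` fields, the retry limit, the risk threshold, and the order in which the checks are applied are all assumptions chosen for illustration. Bounding retries and escalating once they run out is one reasonable way to keep “retry” from becoming an infinite loop.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class StepOutcome:
    """Hypothetical summary of what happened when an agent ran one workflow step."""
    timed_out: bool = False
    missing_fields: List[str] = field(default_factory=list)
    risk_score: float = 0.0
    anomaly: bool = False


# Hypothetical limits; real values would come from the workflow's own rules.
MAX_RETRIES = 3
RISK_ESCALATION_THRESHOLD = 0.7


def decide(outcome: StepOutcome, attempt: int) -> str:
    """Map a step outcome to an explicit, predictable next action."""
    if outcome.timed_out:
        # Dependency timed out: retry, but only a bounded number of times.
        return "retry" if attempt < MAX_RETRIES else "escalate"
    if outcome.missing_fields:
        # Data is missing: ask for exactly what is missing.
        return "request:" + ",".join(outcome.missing_fields)
    if outcome.risk_score >= RISK_ESCALATION_THRESHOLD:
        return "escalate"
    if outcome.anomaly:
        # Something looks off: stop and wait for a human.
        return "pause"
    return "proceed"
```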

When governance (permissions, audit trails, approval gates, failure paths) is built into the workflow, it stops feeling like “extra work” and starts feeling like the reason you can move fast safely. This is the difference between AI that lives in demos and AI that can survive a real audit, a real outage, and a real operational day.
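A minimal sketch of governance living inside the workflow rather than beside it, assuming a hypothetical `PERMISSIONS` table, a hypothetical set of writes that always need human approval, and an in-memory `AUDIT_LOG` list standing in for a real, append-only audit store. Every attempt is recorded, including denied ones, which is what lets an auditor reconstruct what the agent tried to do.

```python
import datetime as dt
from typing import Dict, List, Optional, Set

# Hypothetical permissions: what each agent may read and what it may change.
PERMISSIONS: Dict[str, Dict[str, Set[str]]] = {
    "servicing_agent": {
        "read": {"profile", "transactions"},
        "write": {"case_note", "limit_change"},
    },
}

# Hypothetical approval gate: writes that always need a named human approver.
NEEDS_APPROVAL: Set[str] = {"limit_change"}

# In-memory stand-in for an append-only audit store.
AUDIT_LOG: List[dict] = []


def perform(agent: str, op: str, resource: str, approved_by: Optional[str] = None) -> str:
    """Check permissions and approval gates, and record every attempt, allowed or not."""
    granted = PERMISSIONS.get(agent, {}).get(op, set())
    if resource not in granted:
        result = "denied"
    elif op == "write" and resource in NEEDS_APPROVAL and approved_by is None:
        result = "awaiting_approval"
    else:
        result = "allowed"
    AUDIT_LOG.append({
        "at": dt.datetime.now(dt.timezone.utc).isoformat(),
        "agent": agent,
        "op": op,
        "resource": resource,
        "approved_by": approved_by,
        "result": result,
    })
    return result


print(perform("servicing_agent", "write", "limit_change"))  # awaiting_approval
```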

The Practical Playbook

If you want this to feel lightweight, the approach has to be lightweight. The simplest way is to commit to a small number of decisions and follow through ruthlessly.

Start with one workflow where the pain is obvious and measurable. Connect only the systems required to complete that workflow reliably. Put an agent on top that does real work (assembling context, validating inputs, drafting actions, routing exceptions) while keeping humans in control where it matters. Then measure outcomes in the language of business operations: cycle time, cost per case, error rates, rework, backlog size, and time-to-resolution.
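As a worked example of measuring in operations language, here is a small sketch that turns case records into several of the metrics listed above. The `Case` fields, the metric definitions (cycle time as open-to-close hours, cost per case as total cost divided by closed cases, backlog as still-open cases), and the sample data are assumptions for illustration only.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median
from typing import List, Optional


@dataclass
class Case:
    opened: datetime
    closed: Optional[datetime]  # None while the case is still open
    had_error: bool
    reworked: bool


def summarize(cases: List[Case], total_cost: float) -> dict:
    """Compute a few operations metrics; rates are over closed cases only."""
    closed = [c for c in cases if c.closed is not None]
    n = len(closed)
    cycle_hours = [(c.closed - c.opened).total_seconds() / 3600 for c in closed]
    return {
        "median_cycle_time_h": median(cycle_hours) if n else None,
        "cost_per_case": total_cost / n if n else None,
        "error_rate": sum(c.had_error for c in closed) / n if n else None,
        "rework_rate": sum(c.reworked for c in closed) / n if n else None,
        "backlog": len(cases) - n,
    }


# Hypothetical sample data: three closed cases and one still open.
cases = [
    Case(datetime(2025, 1, 6, 9), datetime(2025, 1, 6, 13), had_error=False, reworked=False),
    Case(datetime(2025, 1, 6, 10), datetime(2025, 1, 7, 10), had_error=True, reworked=True),
    Case(datetime(2025, 1, 6, 11), datetime(2025, 1, 6, 17), had_error=False, reworked=True),
    Case(datetime(2025, 1, 7, 9), None, had_error=False, reworked=False),
]
print(summarize(cases, total_cost=300.0))
```

For this sample, the closed cases take 4, 24, and 6 hours, giving a median cycle time of 6 hours, a cost per case of 100.0, an error rate of 1/3, a rework rate of 2/3, and a backlog of one. The median is used rather than the mean so that a single slow case does not dominate the headline number.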

From there, expand in the most executive-friendly way possible: reuse what you already exposed, and only add the next integration when it unlocks the next chunk of value. That’s how you avoid the “integration initiative” trap. You’re not building infrastructure for its own sake. You’re building capabilities that pay rent immediately, and then keep paying rent across multiple use cases.

The sober conclusion is this: integration layers matter more than most AI proposals admit, but they don’t have to be heavy. Done well, integration becomes the quiet engine that turns AI from a clever assistant into a production capability, one workflow at a time, compounding as you go.

© 2025 FlowX.AI Business Systems